Six ways I use AI when writing

And four ways AI fails me

[This is a modified version of a post I published a few months ago for paid subscribers only. Big thanks to the commenters for their helpful thoughts and suggestions.]

Tyler Cowen recently said he regularly uses generative AI when working, just as many of us now use Google. I've been trying to do the same.

As I write this, I have Claude open in front of me. This is partly because I want to become fluent in using AI—it will be increasingly important in our daily lives. But I also get immediate benefits. Claude helps improve my writing and my work more generally.

It took a while, though, to understand what Claude does well. In Co-Intelligence: Living and Working with AI, Ethan Mollick pointed out that many people start with the wrong idea of what AIs are good at. We know traditional computers excel at precise, logical tasks such as doing math and looking up information, and they do poorly at creative tasks like telling stories and brainstorming ideas. We tend to assume that LLMs will do the same. And this is mistaken—in fact, the reverse is usually true.

So here’s what I learned. Please leave further suggestions in the comments, and also let me know if you think that systems other than Claude—Gemini, Copilot, Grok, the many versions of ChatGPT, etc.—would serve as better writing assistants.

What it’s good at

Sentence improvement. I don’t use Claude to write for me. (More on this below.) But sometimes, I’ll have a sentence or paragraph I’m not happy with, and I’ll throw it at Claude to make suggestions. I never just paste what it suggests, but the feedback is often helpful.1

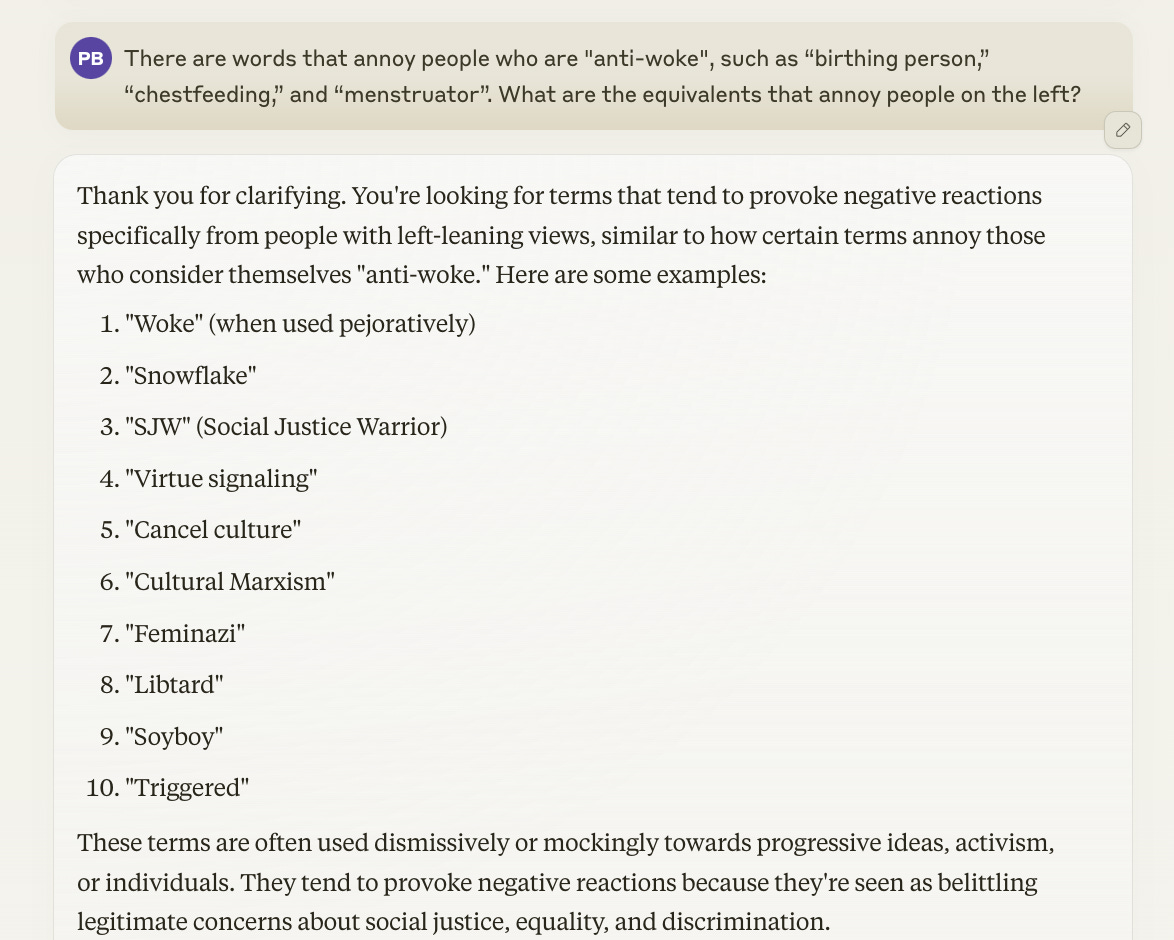

Examples. Claude is terrific at providing examples. For a post on social change, I had several examples of words that annoy the “anti-woke,” but I found it harder to think of good examples of words that annoy people on the left. Claude had useful suggestions.

Titles and subtitles. Claude is good at providing titles, subtitles, section headings, etc. Its suggestions are never that clever, but if you feed in your text (just cut and paste, or use the “attachment” button), and ask for 20 good titles, you’ll often find something worth using, maybe in a modified form. (You can guide it by asking for a title that’s funny, or that’s a play on words, or that includes a literary reference.)

You can also ask it questions like “Which is the better title, X or Y?” When I first did this, I expected it to be wishy-washy (X is better in some ways; Y in others); I was surprised that it often has strong opinions (X is much better than Y because …), and they tend to be reasonable.

Tutorials. Claude is really good at explaining things. One tip is that if you find an explanation tough-going, try asking something like, “Explain blah blah to me like I’m a smart 12-year-old.”2 It’s good at giving further examples and answering follow-up questions, and, of course, it is infinitely patient and never asks me why I’m so slow at picking things up.

Reverse outlines. For a long piece, it’s helpful to see the overall structure of your argument. Asking Claude for a reverse outline is a good way to do this.

Critiques. I’ll often give it something I’ve written and ask: “So, what do you think? What would my toughest critic say?” Now, its powers here are limited. It’s never said anything like “You’ve contradicted yourself here.” or “The evidence doesn’t support this argument of yours.” It gives more of a vibe-level response. It might tell me that I need to give more examples, say, or not appear too close-minded on a certain issue. This is nowhere near the level of the comments I’d get from a sharp colleague or friend, but it is still useful.

What it’s less good at

Actual writing. I know people who have AIs write first drafts of abstracts, grant proposals, and so on. I know others who have it rewrite entire texts. And I heard of someone who writes drafts of Substack articles, gives them to an AI, and says, “Make this spicier!” But I rarely have Claude write first drafts or mess directly with my text.3 Setting aside ethical concerns, I don’t like its style, and I don’t think other people like it either. It’s clear and coherent but never much of a pleasure to read.

Proofreading. I discuss this here. Before putting up a post, I run it through Claude and ask it to list typos and grammatical errors, and it usually finds some, so it’s not a waste of time. But it misses many mistakes, including obvious ones.

(Strangely, I found that if I follow up and write “Please try harder,” it usually apologizes for being so sloppy the first time and then comes up with many of the mistakes it missed.)

Perhaps there is a better AI for this than Claude—any suggestions?

Getting quotations. It’s awful at this. I once asked Claude to find quotes from prominent scholars who say that social psychology is a disaster because of the replication crisis. It gave me two superb quotes, one from Nassim Taleb and the other from Gerd Gigerenzer. They would have ended up in a Wall Street Journal article if I hadn’t gone to Google and discovered that they were both fabricated.

I just made the same request again, wondering if the same two fake quotes would show up, and now it’s refusing to answer because it’s “not comfortable” with my request. Really, Claude, really?

Scientific research. My wife was exploring a research idea and asked ChatGPT whether a phenomenon she observed in children had previously been found in adults. “Yes!” said ChatGPT. Then, it cited several articles that it said supported her claim. But when she read the articles, it turned out that they didn’t find anything like the phenomenon she was looking for.

In general, Claude works too hard to give us what it thinks we want, making it an unreliable researcher. You can try to change its incentives (I once told ChatGPT, “You can’t make any mistakes here—if this isn’t the right citation, my boss will fire me!” and this did make it more cautious), but you can never fully trust it.

After finishing a draft of this post, I was reading Nate Silver’s new book, On the Edge: The Art of Risking Everything (highly recommended), and noticed that he thanks ChatGPT in the acknowledgments.

It was reassuring to see that Silver and I came to some of the same conclusions. We agree that AI is a good “creative muse” and does well at explaining technical topics. But it’s unreliable at fact-checking, and its prose style is lousy.

It’s only fair to give Claude a chance to reply.

This is too kind and so not very helpful. I followed up.

That’s better!

Is it just me, or do AIs suggest “delved” a lot?

I said “rarely”, not “never”. I sometimes have to fill out administrative forms that nobody will read, such as summaries of courses I’ve already taught or descriptions of job candidates who have already been hired. I might throw a syllabus or CV at Claude, ask for something that’s the right number of words, give it a quick look, and send it in. As Abraham Maslow once put it, “What’s not worth doing isn’t worth doing well.”

I haven’t yet tried this myself, but I have friends who use LLMs to write reference letters. You give it several reference letters you’ve already written so that it gets a sense of your style, feed in some notes about the new person, and then you’re done. It’s not so different from how some professors always write reference letters, which is to take a similar letter they’ve already written and modify it for the new person.

I might use AI more for these tasks in the future. But I promise you that this Substack will always be purely human-made.

Thanks a lot for this post! As someone who has been doing some work on the use of AI tools for research in the social and behavioral sciences (resulting in a couple of workshops/talks and publications), I find this very interesting.

Regarding your hunch about the word "delve(d)", a recently published preprint provides some systematic empirical evidence for this:

Kobak, D., Márquez, R. G., Horvát, E.-Á., & Lause, J. (2024). Delving into ChatGPT usage in academic writing through excess vocabulary (Version 1). arXiv. https://doi.org/10.48550/ARXIV.2406.07016

Examples in (2) seem to kind of miss the point, those all seem like pejoratives, so of course they're annoying.

IMO should be something like "patriot", "family values", "taxpayer", "personal responsibility" etc.